Unlocking the Power of Video with Twelve Labs

I was sitting at a little coffee shop in the Outer Sunset when the table next to me erupted with an overwhelming chorus of “I know’s!” and “so true’s.” What was so universally relatable? The statement “it is completely impossible to search for videos on TikTok.”

I immediately thought about a parallel of my own: managing travel videos in Drive. To say that search is difficult on that platform would be a gross understatement. I briefly tried making content in Premiere Pro and found sifting through media so cumbersome that I haven’t opened the application since.

“Our world is totally dominated by video content, and yet most software programs treat these clips as largely black boxes.”

— Kelly Toole, Index Ventures

Our world is totally dominated by video content, and yet most software programs treat these clips as largely black boxes. Applications rely on simple metadata like title, description, and tags in order to build features and intelligence. This limited level of video understanding simply hasn’t kept up with the world’s ever-growing focus on content. This often leads to exactly the kinds of poor user experiences that this group of coffee-goers was lamenting.

Fast forward to the tail end of 2021. I’m on Zoom, eagerly waiting to meet the winners of the video retrieval track of the ICCV VALUE (Video-And-Language Understanding Evaluation) Challenge hosted by Microsoft. During challenges like these, some of the greatest minds in research from all over the world go head-to-head with one another, competing to produce the most effective AI model for the task at hand. In this case, the task was to identify the precise moment within a sea of videos that matched a prompt.

Jae, Aiden, and Soyoung joined the video meeting and launched into their Twelve Labs story. I was surprised to learn that it was just a small group of machine learning engineers behind the submission that outmatched major global AI heavyweights like Tencent, Microsoft, and Baidu. Though, the more time I spent with Jae, Aiden, and Soyoung, the less surprising it seemed.

Their work is fueled by exceptional thoughtfulness and resolve. They care deeply about getting to the bottom of customers’ real pain and solving those problems in a way that packages cutting-edge research behind a developer-friendly interface. The three are determined to make this new level of deep video understanding – one built on recent breakthroughs in computer vision and natural language processing – accessible to everyone.

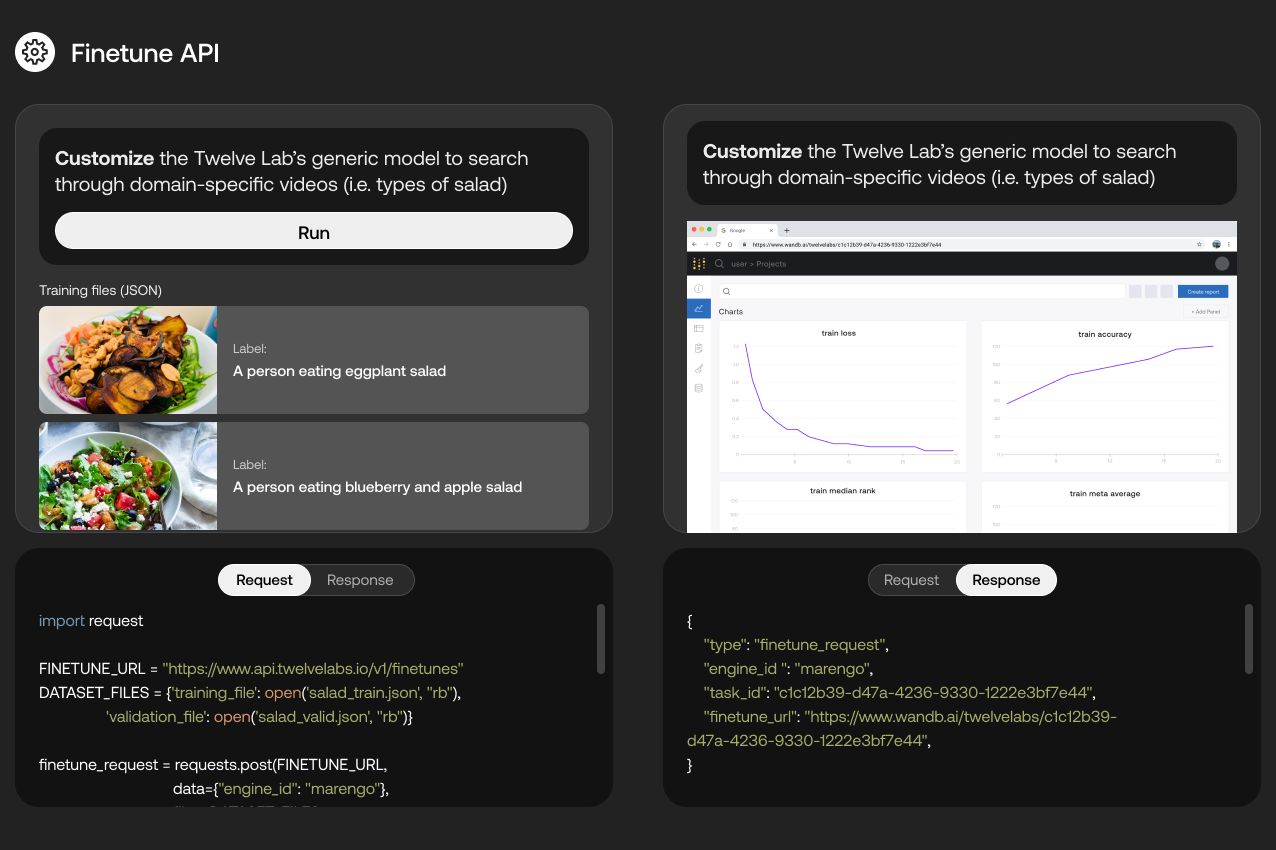

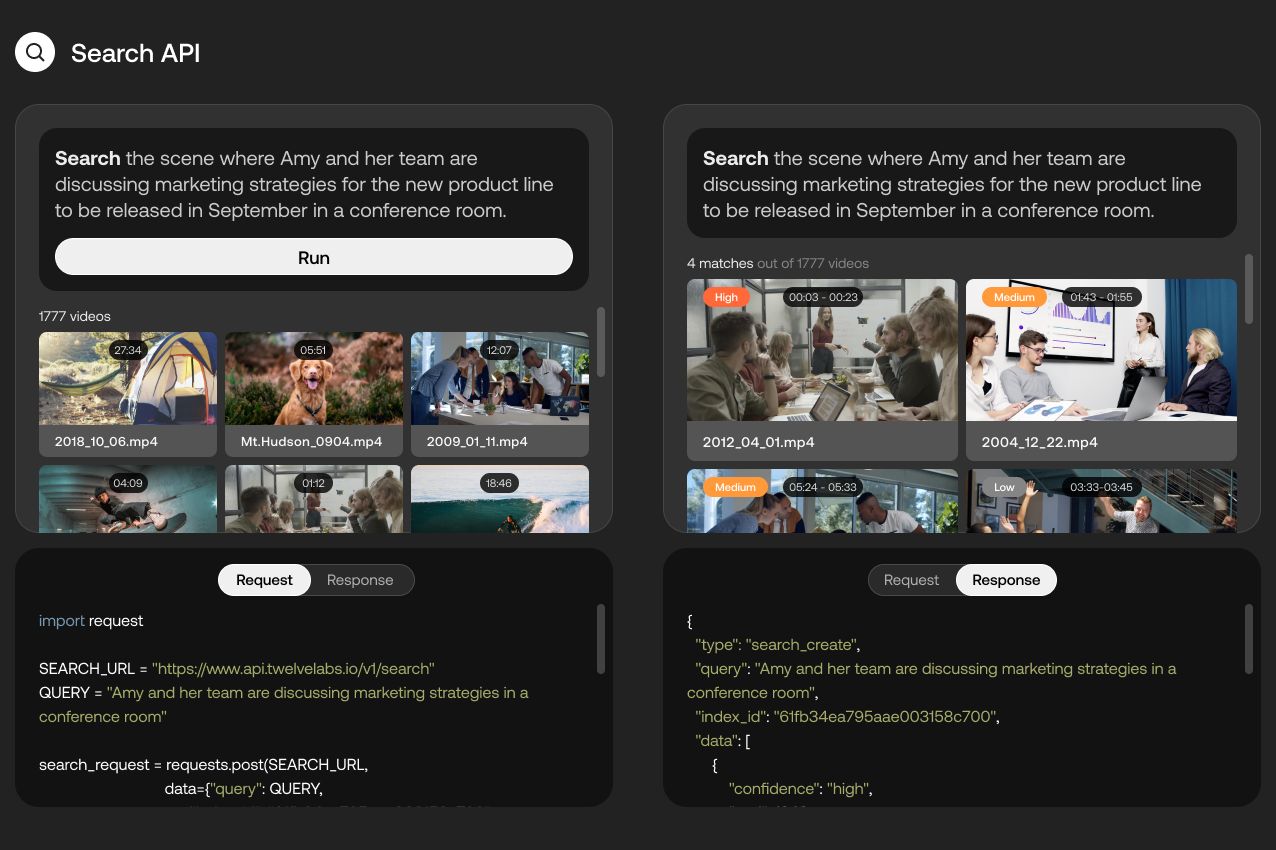

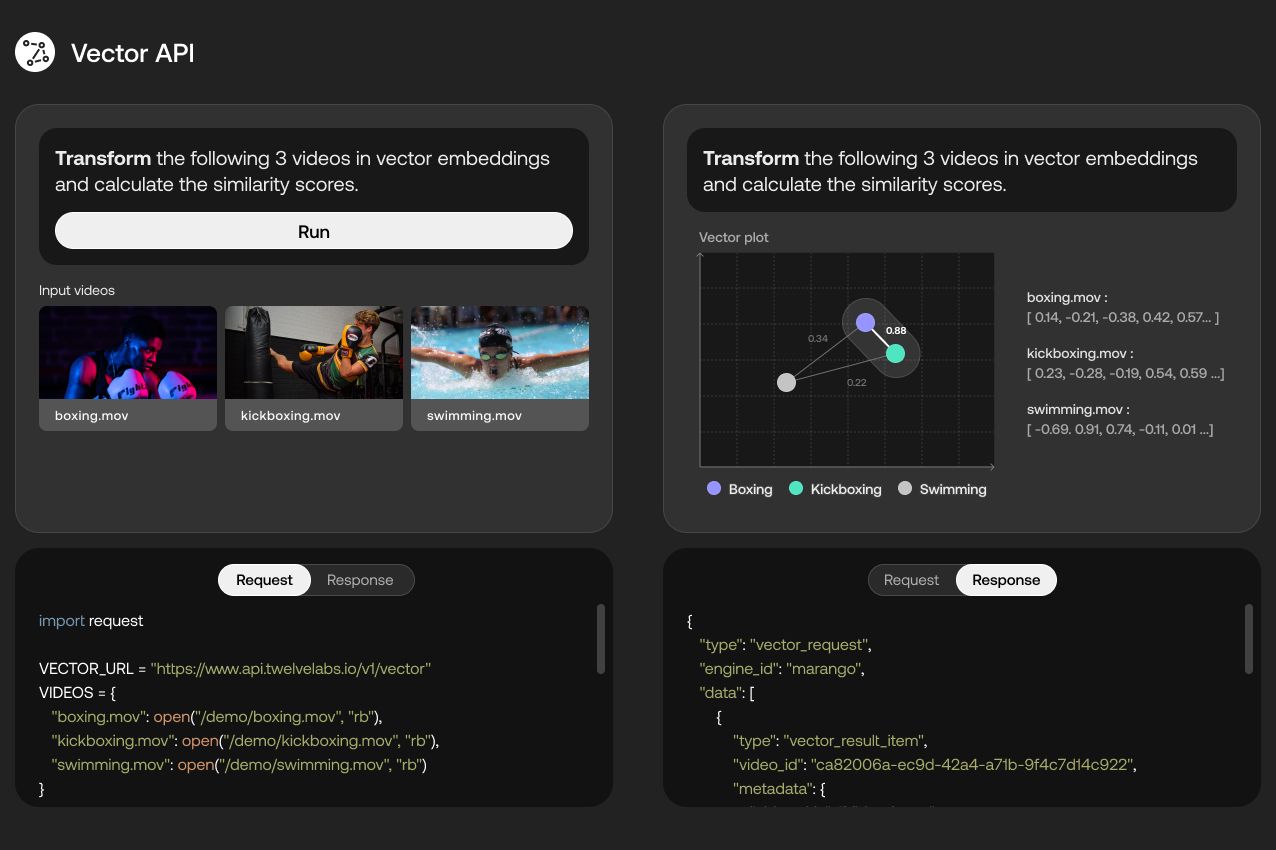

Twelve Labs Product Images, Finetune, Search & Vector APIs

“Twelve Labs is enabling the next generation of these video-centric applications to build dramatically improved features around discovery, personalization, recommendations, content moderation, explainability, and more.”

— Kelly Toole, Index Ventures

It is undeniable: video is becoming the most important way we consume, share, and store information online. Video-centric applications have exploded in popularity across almost every product category imaginable. TikTok users spend an average of 52 minutes a day on the app. Birthday parties, concerts, and even weddings are taking place on Gather. More than 500 hours of video are uploaded to YouTube every minute. Zoom surpassed 300M daily video-meeting participants. Video intelligence tools like Gong and BrightHire are revolutionizing the way we sell and hire.

Twelve Labs is enabling the next generation of these video-centric applications to build dramatically improved features around discovery, personalization, recommendations, content moderation, explainability, and more.

We are thrilled to partner with Jae, Aiden, Soyoung, SJ, Dave, and their Twelve Labs team as they redefine what’s possible with video search and understanding.

Published — March 16, 2022
